In this post, we are going to take a look at the different types of testing that can be used within our projects to dispel those illusions we tell ourselves as software developers, allowing us to deliver high-quality software to our customers.

“Testers don’t like to break things; they like to dispel the illusion that things work.”

Kaner, Bach, Pettichord - Lessons Learned in Software Testing: A Context-Driven Approach

Testing is a vast topic with many, many different types of testing. Guru99 has a post that lists over 100. This post will cover the various categories of testing types, how these categories interrelate and some of the most common types of testing used.

Categories of testing types

Before we can start discussing individual types of testing, we first need to look at the different categories of testing types:

Functional vs non-functional


- Functional tests - These tests check that the software behaves as specified in the functional requirements. In other words, does the software do what it should?

- Non-functional tests - These tests determine how well the system performs under various expected and abnormal conditions. In other words, how well does the system perform? For example, does the system respond acceptably under expected load and under stress?
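To make the distinction concrete, here is a minimal sketch in Python, written as plain test functions that pytest would also discover. The `search` function and the 0.5-second time budget are hypothetical; a real non-functional test would take its threshold from the non-functional requirements and would usually run against a realistic environment.

```python
import time


def search(items, target):
    """Hypothetical function under test: index of target in a sorted list, or -1."""
    lo, hi = 0, len(items) - 1
    while lo <= hi:
        mid = (lo + hi) // 2
        if items[mid] == target:
            return mid
        if items[mid] < target:
            lo = mid + 1
        else:
            hi = mid - 1
    return -1


# Functional tests: does the software do what it should?
def test_finds_existing_item():
    assert search([1, 3, 5, 7], 5) == 2


def test_returns_minus_one_for_missing_item():
    assert search([1, 3, 5, 7], 4) == -1


# Non-functional test: how well does it do it? Here, a response-time budget.
TIME_BUDGET_SECONDS = 0.5  # assumed threshold for illustration, not a real requirement


def test_searches_complete_within_time_budget():
    data = list(range(1_000_000))
    start = time.perf_counter()
    for target in range(0, 1_000_000, 1000):
        assert search(data, target) == target  # still correct on a larger input
    elapsed = time.perf_counter() - start
    assert elapsed < TIME_BUDGET_SECONDS


if __name__ == "__main__":
    test_finds_existing_item()
    test_returns_minus_one_for_missing_item()
    test_searches_complete_within_time_budget()
    print("all tests passed")
```

Both kinds of test exercise the same code; what differs is the question being asked.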

Black box, white box and grey box


- Black box testing (also known as behavioural testing) is performed without the tester having any knowledge of the internal implementation of the software.

- White box testing (also known as open or glass box testing) is the opposite of black box testing. Testers know the internal structure and implementation of the software and use this understanding to design test cases that exercise the different paths through the code.

- Grey box testing is a hybrid of black and white box testing. Testers have a partial understanding of how the code is structured but test at the user level.
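As a rough illustration, the sketch below tests the same hypothetical `shipping_cost` function in two ways. The black-box tests are derived only from the specification in the docstring, using boundary values around the threshold; the white-box tests are chosen after reading the code so that every branch is executed. The function and its pricing rules are invented for this example.

```python
def shipping_cost(order_total, express):
    """Hypothetical function under test.

    Specification (all a black-box tester sees): standard delivery is free for
    orders of 50.00 or more, otherwise it costs 4.99. Express always costs 9.99.
    """
    if express:
        return 9.99
    if order_total >= 50:
        return 0.0
    return 4.99


# Black-box tests: derived only from the specification, e.g. boundary values
# either side of the 50.00 free-delivery threshold.
def test_black_box_just_below_threshold_pays_standard():
    assert shipping_cost(49.99, express=False) == 4.99


def test_black_box_at_threshold_ships_free():
    assert shipping_cost(50.00, express=False) == 0.0


# White-box tests: derived from reading the code, choosing inputs so that each
# branch of shipping_cost runs at least once.
def test_white_box_express_branch():
    # The express check comes first, so a large order still pays for express.
    assert shipping_cost(100.00, express=True) == 9.99


def test_white_box_free_branch():
    assert shipping_cost(75.00, express=False) == 0.0


def test_white_box_standard_branch():
    assert shipping_cost(10.00, express=False) == 4.99


if __name__ == "__main__":
    for test in (
        test_black_box_just_below_threshold_pays_standard,
        test_black_box_at_threshold_ships_free,
        test_white_box_express_branch,
        test_white_box_free_branch,
        test_white_box_standard_branch,
    ):
        test()
    print("all tests passed")
```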

Manual vs automated


- Manual testing is performed by testers who carry out the test steps "by hand".

- Automated testing uses tools and scripts to execute tests without requiring manual effort.

Both approaches have their places in delivering high-quality software and have their pros and cons.

Unit, integration, system, regression and acceptance


- Unit testing - These tests are usually created by developers to test their code at the level of an individual unit or component. A unit is the smallest part of the software that can be tested, for example, a method, and each test specifies a series of inputs and the expected outputs. A testing framework executes the unit tests automatically and reports how many produced the expected output and which produced unexpected results. To allow each unit to be tested independently, mock objects or stubs may be used to remove its dependencies on other units.

- Integration testing - Individual units are combined and tested as a group to ensure that they work together as expected.

- System testing - Takes the components previously integration tested and runs testing across the system as a whole. This kind of testing identifies problems that may only become evident on a system-wide basis.

- Regression testing - Used to determine if any changes have created problems with previously working functionality. Depending on the coverage required, these tests may involve rerunning unit, integration or even system testing.

- Acceptance testing - The focus of acceptance testing differs from that of unit and integration testing. Rather than finding bugs in the system, it assesses how well the software meets the user's expectations. For this reason, it is often referred to as User Acceptance Testing (UAT) and is typically carried out by the users themselves.
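The unit and integration levels can be sketched in Python with the standard library's `unittest.mock`. `PriceConverter` and `InMemoryRateProvider` are hypothetical classes invented for this example: the unit tests replace the rate provider with a stub so that only the converter's own logic is under test, while the integration test wires the real classes together.

```python
from unittest.mock import Mock


class InMemoryRateProvider:
    """Hypothetical dependency: looks up exchange rates from a dict."""

    def __init__(self, rates):
        self._rates = rates

    def rate(self, currency):
        return self._rates[currency]


class PriceConverter:
    """Hypothetical unit under test: converts a GBP amount using a rate provider."""

    def __init__(self, rate_provider):
        self._rate_provider = rate_provider

    def convert(self, amount_gbp, currency):
        if amount_gbp < 0:
            raise ValueError("amount must be non-negative")
        return round(amount_gbp * self._rate_provider.rate(currency), 2)


# Unit tests: the dependency is replaced by a stub, so only PriceConverter runs.
def test_unit_convert_uses_rate_from_provider():
    stub_provider = Mock()
    stub_provider.rate.return_value = 1.25  # canned answer, no real lookup
    converter = PriceConverter(stub_provider)
    assert converter.convert(10.00, "USD") == 12.5
    stub_provider.rate.assert_called_once_with("USD")


def test_unit_rejects_negative_amounts():
    converter = PriceConverter(Mock())
    try:
        converter.convert(-1, "USD")
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError")


# Integration test: the real units are combined and tested as a group.
def test_integration_converter_with_real_provider():
    converter = PriceConverter(InMemoryRateProvider({"EUR": 1.17}))
    assert converter.convert(100.00, "EUR") == 117.0


if __name__ == "__main__":
    test_unit_convert_uses_rate_from_provider()
    test_unit_rejects_negative_amounts()
    test_integration_converter_with_real_provider()
    print("all tests passed")
```

Kept in an automated suite and rerun after every change, tests like these also serve as regression tests.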

How do these categories of testing type relate?

As with many aspects of testing, these categories are often not clear-cut, and there may be overlap between them. Also, as touched on above, the tests are carried out by different roles (developer, tester, user) and for different purposes.

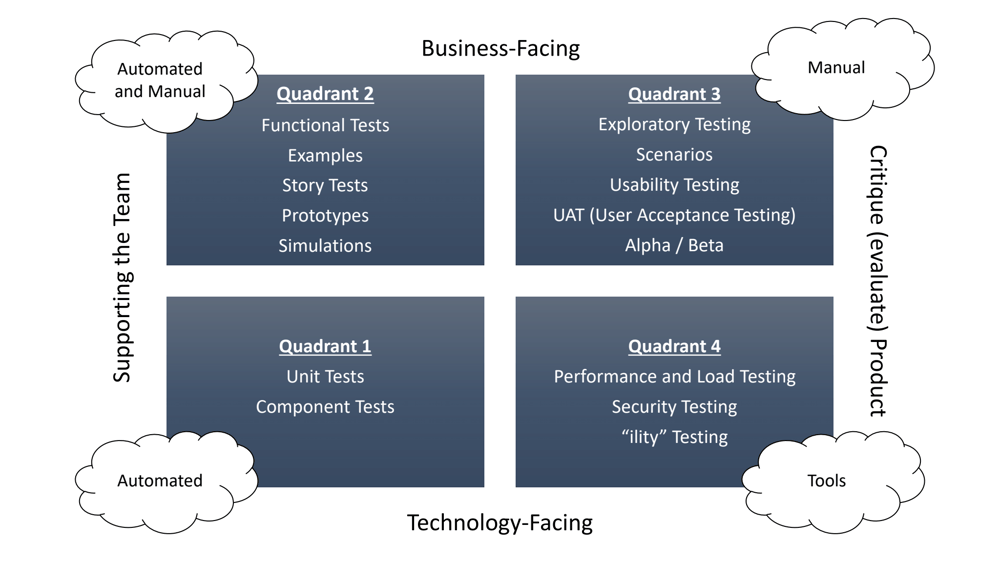

Fortunately, Brian Marick, one of the original authors of the Manifesto for Agile Software Development, developed the Agile Testing Quadrants diagram in a series of blog posts. Lisa Crispin and Janet Gregory expanded on this diagram in their book Agile Testing: A Practical Guide for Testers and Agile Teams.

This diagram can be our map to understand which roles carry out the tests, for what purpose and using which types of tools, automation or manual processes.

The diagram describes the testing process as follows:

- The testing process is split into four separate quadrants, labelled Q1 to Q4.

- The quadrant numbers do not represent a waterfall-style order in which the tests should be run, but are only used to refer to a specific part of the diagram.

- The clouds at the corners of the diagram represent the most common approaches used for tests in those specific quadrants. So Q1 tests are usually automated, Q2 a mixture of automated and manual, those in Q3 are typically manual, and finally, Q4 may use specific testing tools to test performance or security, for example.

- The quadrants represent two different comparisons:

  - Supporting the team vs critiquing the product: The left-hand quadrants (Q1 and Q2) focus on preventing defects during and after coding, whereas the right-hand quadrants (Q3 and Q4) critique, or evaluate, the product to find defects and missing features.

  - Business-facing vs technology-facing: The top two quadrants (Q2 and Q3) contain tests whose results are immediately meaningful to business users. The bottom two (Q1 and Q4) contain tests that are more meaningful to the development team. For example, a business user may not be able to interpret the detailed results of a performance test but would certainly recognise if the software was unacceptably slow to use.

- In Agile projects, most teams start by specifying Q2 tests and develop Q1 tests as the developers write the code. Q3 exploratory testing can help refine requirements if they are uncertain, and Q3/Q4 tests verify that the software product is going to be acceptable to the users.

- Usually, most projects will involve tests from across all four quadrants.

- There are no hard-and-fast rules about which type of test falls in which quadrant, but the taxonomy can be used to facilitate conversations within teams about which testing approaches to adopt.

Testing types by quadrant

Q1: Technology-facing tests that guide development

The lower-left quadrant focuses on the quality of the code being developed and represents test-driven development (TDD) using automated unit and integration tests.
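The test-first rhythm of TDD can be sketched as follows, using the Gregorian leap-year rule as a stand-in requirement (it is not from the original post). The tests are written first and fail because `is_leap_year` does not exist yet; the implementation is then added to make them pass, and any later refactoring is checked by rerunning the same tests.

```python
# Step 1 (red): write the tests from the requirement before any implementation
# exists. Running them at this point fails.
def test_years_divisible_by_4_are_leap_years():
    assert is_leap_year(2024)


def test_centuries_are_leap_years_only_if_divisible_by_400():
    assert not is_leap_year(1900)
    assert is_leap_year(2000)


def test_other_years_are_not_leap_years():
    assert not is_leap_year(2023)


# Step 2 (green): write just enough code to make the tests pass.
# Step 3 (refactor): tidy the code, rerunning the tests after each change.
def is_leap_year(year):
    """Gregorian leap-year rule."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


if __name__ == "__main__":
    test_years_divisible_by_4_are_leap_years()
    test_centuries_are_leap_years_only_if_divisible_by_400()
    test_other_years_are_not_leap_years()
    print("all tests passed")
```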

Example testing types:

- Unit tests

- Integration tests

Q2: Business-facing tests that guide development

The tests in this quadrant also support the development team, but from a higher-level business or customer perspective, checking the software against the documented requirements and stories from a user's point of view.

Both Q1 and Q2 tests give the development team rapid feedback, allowing fast troubleshooting as development progresses. Therefore, Q2 tests should also be automated where possible and practical.
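Q2 tests are often written in a Given/When/Then style that mirrors the language of the story, and then automated. Tools such as Cucumber or behave bind plain-language scenarios to code; the sketch below stays dependency-free by expressing one scenario for a hypothetical `Basket` class directly as a Python test, with the story steps as comments. The story and class are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Basket:
    """Hypothetical system under test for a shopping-basket story."""

    items: dict = field(default_factory=dict)  # product -> (unit price, quantity)

    def add(self, product, unit_price, quantity=1):
        price, qty = self.items.get(product, (unit_price, 0))
        self.items[product] = (price, qty + quantity)

    def total(self):
        return round(sum(price * qty for price, qty in self.items.values()), 2)


# Story (hypothetical): "As a shopper, I want my basket total to reflect every
# item I add, so that I know what I will pay at checkout."
def test_scenario_adding_the_same_item_twice_updates_the_total():
    # Given an empty basket
    basket = Basket()
    # When the shopper adds two coffees and one croissant
    basket.add("coffee", 2.50)
    basket.add("coffee", 2.50)
    basket.add("croissant", 1.80)
    # Then the total reflects all three items
    assert basket.total() == 6.80


if __name__ == "__main__":
    test_scenario_adding_the_same_item_twice_updates_the_total()
    print("scenario passed")
```

Because the scenario reads like the story, a customer or business analyst can review whether it captures the requirement, while the team still gets fast automated feedback.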

Example testing types:

- Functional testing

- Scenario-based testing

- User experience testing using prototypes (possibly paper-based or wireframes).

- Pair testing

Q3: Business-facing tests that critique (evaluate) the product

Q3 tests are used to validate working software against the business user's or customer's expectations. This type of testing is typically manual and may highlight areas where the documented requirements or stories are incorrect or incomplete.

Example testing types:

- Exploratory testing

- Usability testing

- Pair testing with customers

- Collaborative testing

- Acceptance testing

  - Business acceptance testing (BAT)

  - Contract acceptance testing (CAT)

  - Operational acceptance testing (OAT)

  - Alpha testing

  - Beta testing

  - User acceptance testing

Q4: Technology-facing tests that critique (evaluate) the product

These tests are technology-focused but are no less critical for successfully delivering high-quality software.

One of the criticisms of agile development is that these tests are often overlooked or left to the end of the project, where the cost of resolving issues is much higher. These oversights may be due to agile's emphasis on having stories written by customers or from the customer's perspective. Non-technical team members may defer non-functional requirements, such as performance and security, to the development team, who in turn may focus only on delivering the stories as prioritised by customers.

Tests that evaluate these non-functional criteria should be included from the beginning of the project to avoid the cost of addressing them late in the process. These tests should be automated, using specialist tools where available to evaluate the software against the relevant metrics.
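The shape of an automated Q4 check can be sketched in a few lines: generate concurrent load, collect latencies and assert against a non-functional threshold. This assumes a hypothetical `handle_request` function standing in for the real system and an invented 200 ms 95th-percentile target. In practice, dedicated tools such as JMeter, Gatling or Locust would drive load against a deployed environment.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(payload):
    """Hypothetical operation under test; stands in for a call to the real system."""
    time.sleep(0.01)  # simulated processing time
    return payload.upper()


def timed_call(payload):
    """Run one request and return its latency in seconds."""
    start = time.perf_counter()
    handle_request(payload)
    return time.perf_counter() - start


def run_load_test(concurrent_users, requests_per_user):
    """Send requests from several simulated users at once and collect latencies."""
    payloads = ["order"] * (concurrent_users * requests_per_user)
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        return list(pool.map(timed_call, payloads))


def p95(latencies):
    """95th percentile: quantiles with n=20 returns 19 cut points; take the last."""
    return statistics.quantiles(latencies, n=20)[-1]


P95_BUDGET_SECONDS = 0.2  # assumed service-level target, for illustration only


def test_p95_latency_under_expected_load():
    latencies = run_load_test(concurrent_users=10, requests_per_user=20)
    assert len(latencies) == 200
    assert p95(latencies) < P95_BUDGET_SECONDS


if __name__ == "__main__":
    test_p95_latency_under_expected_load()
    print("load test passed")
```

Raising the load well beyond expected levels, sustaining it for a long period, or increasing it suddenly would turn the same idea towards stress, soak or spike testing respectively.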

Example testing types:

- Performance testing

  - Load testing

  - Stress testing

  - Soak testing

  - Spike testing

- *ility testing

  - Stability testing

  - Reliability testing

  - Scalability

  - Maintainability

- Security testing

  - Penetration testing

Conclusion

We have touched on the various categories and types of testing used in projects and the part the Agile Testing Quadrants diagram can play in helping us work together as software development teams to deliver high-quality software.

We hosted a webinar that looks at the challenges associated with managing testing:

Test Management for global IT implementations with Jira and Zephyr

Are you managing a large-scale IT implementation? How are you managing the scope of your tests? Do you have visibility over all the testing being done and its progress? Do you have remote teams and testers, and are they all collaborating effectively?

Listen in to this webinar with Laurence Postgate and Chris Bland as they discuss the problems of managing testing in global IT implementations.
